Is Title Insurance Required by Law?

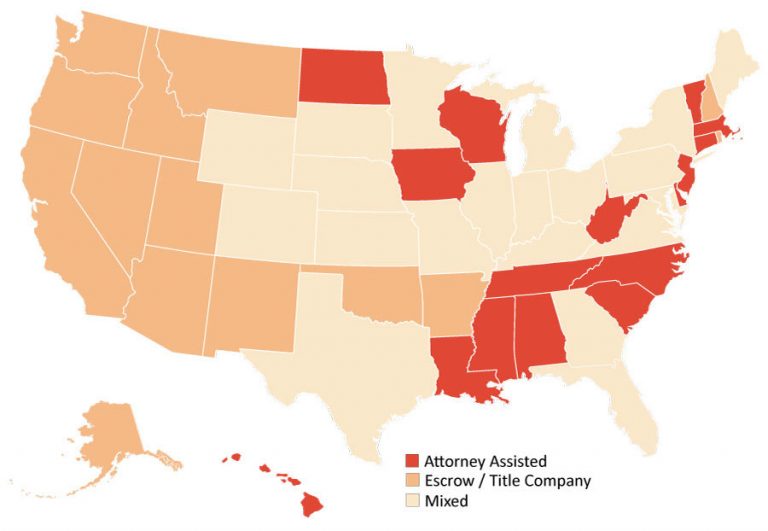

Title insurance is not required by law; however, almost all lenders require a lender’s title insurance policy as a condition of making a loan. A lender wants to protect its interest in the property, and a title insurance policy is one way to do so. In addition, buyers should always insist on an owner’s title policy to protect their equity in the property. Local custom often dictates who pays for the title insurance policy, although this may be a negotiable item in your closing.